Real-World Examples: KMS, KBS, and KWS (What They Are, How They Differ, and How They Win)

“Show me” beats “tell me” in knowledge management. You can define a KMS all day, but nothing clicks like seeing an answer appear for an agent in the middle of a ticket, or a customer solving a tricky setup in three steps, or a field tech scanning a QR code and landing on an exact procedure for that device firmware. This page is your tour—what a Knowledge Management System (KMS) looks like in practice, how it differs from a Knowledge-Based System (KBS) and a Knowledge Work System (KWS), and a set of concrete patterns you can steal.

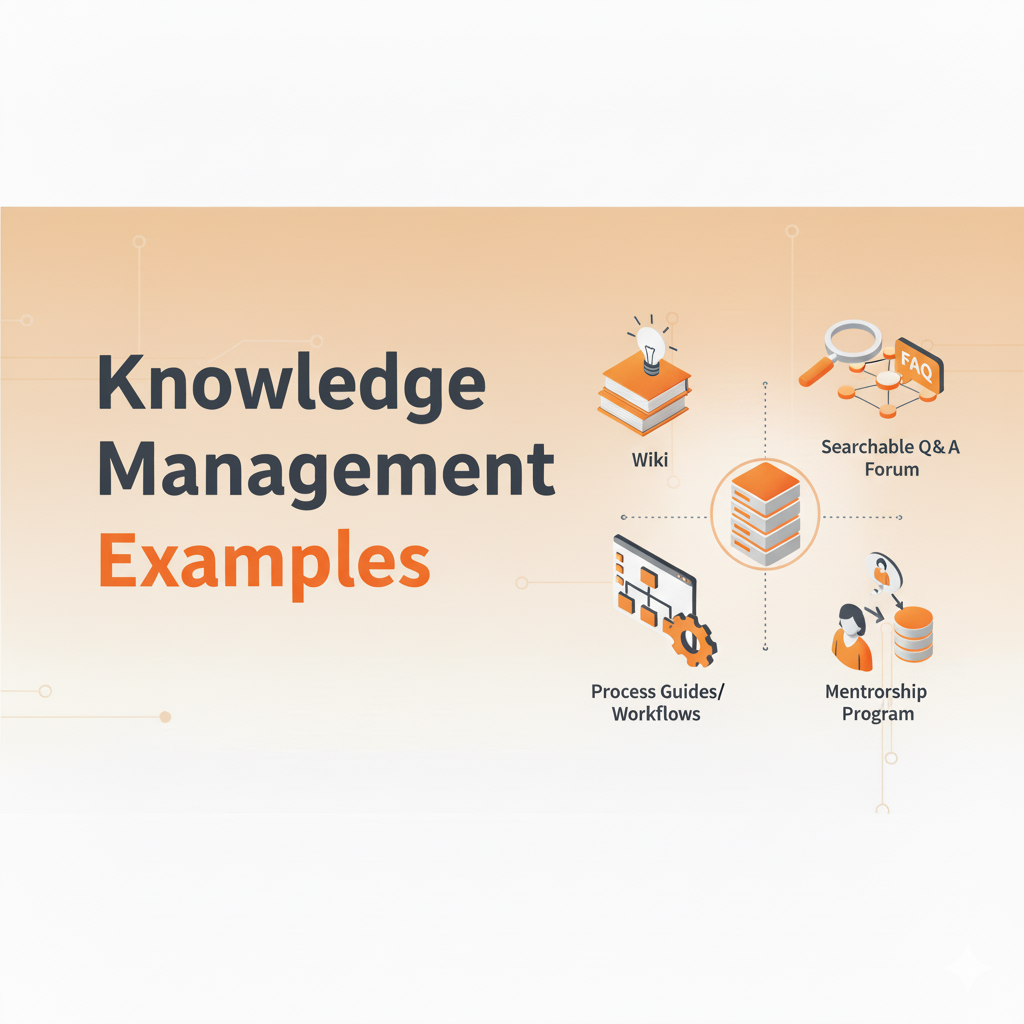

Think of these three like a stack: a KMS is the governed source of truth for reusable answers; a KBS uses explicit, often rule-driven knowledge to make or justify a decision; a KWS is the environment professionals use to create and manipulate knowledge—documents, designs, models, analyses. Most organizations run all three. The trick is to make them reinforce each other rather than compete.

Quick, plain-English distinctions

A KMS is an answer engine with governance. It captures, structures, approves, and delivers short, reusable knowledge objects—how-tos, troubleshooting guides, policy Q&A, checklists—and pushes them into portals, agent consoles, chats, and apps. The success signal is operational: faster resolutions, higher self-service solves, fewer errors, better compliance.

A KBS is a reasoning system. It applies encoded rules, heuristics, or cases to a specific input and produces a recommendation or decision, often with an explanation. Think triage trees, eligibility calculators, underwriting rules, configuration advisors. The success signal is decision quality and speed: fewer exceptions, higher first-pass approvals, consistent outcomes.

A KWS is a creation workspace. It’s where knowledge workers produce new knowledge—spreadsheets, designs, code, research notes, product specs—and collaborate. Think office suites, design tools, data notebooks, wikis. The success signal is throughput and quality of knowledge creation.

If you’re optimizing customer support deflection, you reach for the KMS. If you need a guided decision about eligibility or a step sequence that adapts to answers, you blend KMS + KBS. If your pain is scattered drafts and version chaos, you bring in a KWS and give it a clean handoff into the KMS once knowledge is stable.

Four canonical KMS examples (internal, external, partner, field)

You asked for examples; here are four clear, copy-and-paste patterns. Each uses a structured answer published once and reused everywhere.

1) Internal: Agent-Assist Troubleshooting Card

A new “token expired” sign-in error spikes after a release. A knowledge owner interviews an SME for 15 minutes and drafts a three-step guide in the troubleshooting template (symptoms, likely causes, steps, “if not resolved”). They tag it with the error string, the audience “internal,” and the product version. After approval, the same answer renders as a compact agent-assist card inside the ticket form. When a case category or error text matches, the card appears automatically. Over a week, article-assisted resolutions climb and average handle time (AHT) falls on that pattern. A public variant ships later with two steps and plain, on-brand language for the help center.

Why it works: short, scoped, tagged; one canonical answer, multiple surfaces; measured against real outcomes.
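To make the trigger concrete, here is a minimal sketch of how a card could be matched to a ticket by case category or tagged error text. The `AnswerCard` class, its field names, and `suggest_cards` are hypothetical and not taken from any particular KMS product; a real system would likely add ranking and fuzzier matching.

```python
from dataclasses import dataclass, field


@dataclass
class AnswerCard:
    """Hypothetical knowledge object; field names are illustrative."""
    title: str
    audience: str                                   # "internal", "partner", or "public"
    categories: set = field(default_factory=set)    # case categories that trigger the card
    error_strings: list = field(default_factory=list)  # tagged error text


def suggest_cards(cards, ticket_text, ticket_category, audience="internal"):
    """Return cards whose category or tagged error string matches the ticket."""
    text = ticket_text.lower()
    matches = []
    for card in cards:
        if card.audience != audience:
            continue  # never surface a card meant for another audience
        category_hit = ticket_category in card.categories
        error_hit = any(err.lower() in text for err in card.error_strings)
        if category_hit or error_hit:
            matches.append(card)
    return matches


cards = [
    AnswerCard("Fix 'token expired' at sign-in", "internal",
               categories={"sign-in"}, error_strings=["token expired"]),
    AnswerCard("Reset a billing address", "internal",
               categories={"billing"}),
]

hits = suggest_cards(cards, "User sees Token Expired after the update", "other")
print([card.title for card in hits])
```

Matching on either signal is a deliberate choice in this sketch: agents often miscategorize a ticket, so the error text acts as a second route to the same card.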

2) External: Customer Portal + Virtual Agent

A self-service guide explains how to pair a wearable with two mobile OS versions. The owner uses a how-to template with version facets and GIFs, plus a tiny decision aid for “pair or re-pair?” The portal renders the full guide. The virtual agent pulls a conversational rendering of the same object (just the step set relevant to the user’s device and OS) and links to the canonical article if the user wants more detail. The team tracks solves without escalation and tunes synonyms weekly as new phrasing appears.

Why it works: one answer, two presentations; versioning prevents drift; conversation stays grounded in governed content.

3) Partner: Program Q&A with Controlled Visibility

A channel program changes deal registration rules. Instead of emailing a PDF, the team publishes a policy Q&A with examples and edge cases. Visibility is set to partner; internal readers see extra footnotes, and public users see nothing. The same object powers a partner portal page and a widget in the partner support tool. Owners adjust language after the first week based on questions; exception tickets drop, and time-to-enable for new partners shrinks.

Why it works: audience-aware variants from a single source; provenance and effective dates are visible; updates propagate instantly.
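One way to picture audience-aware variants from a single source is sketched below. It assumes each section of the answer carries a minimum audience and that audiences are ordered public, then partner, then internal. The ranking, the section shape, and the sample text are illustrative assumptions, not a description of a specific product.

```python
# Assumed ordering: a reader sees every section at or below their own rank.
AUDIENCE_RANK = {"public": 0, "partner": 1, "internal": 2}


def render_for(sections, reader):
    """Return the section texts that the given reader is allowed to see."""
    reader_rank = AUDIENCE_RANK[reader]
    return [
        section["text"]
        for section in sections
        if AUDIENCE_RANK[section["min_audience"]] <= reader_rank
    ]


# One canonical policy Q&A object; the text is placeholder content.
policy_qa = [
    {"min_audience": "partner", "text": "Q: When must a deal be registered?"},
    {"min_audience": "partner", "text": "Example: a renewal that adds a product line."},
    {"min_audience": "internal", "text": "Footnote: who approves exceptions."},
]

for reader in ("public", "partner", "internal"):
    print(reader, len(render_for(policy_qa, reader)))
```

Because the filter runs at render time, an edit to any section reaches every surface at once, and there is no second copy to drift.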

4) Field/Manufacturing: QR-Launched Standard Work

A pump alignment procedure exists as a scan of a decade-old manual. The team converts it to standard work: 10 steps with labeled photos, torque specs, and model/firmware facets. A QR code on the chassis resolves to the exact variant. Field tablets cache content for offline use. Repeat truck rolls fall and first-time fix rate rises. When engineering issues an engineering change request (ECR), the owner updates step 6 and the next review date rolls forward.

Why it works: images plus steps; offline delivery; version facets route to the right procedure without forks.
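As a rough sketch of how a QR code could resolve to the right variant, the snippet below assumes the code encodes a URL carrying `model` and `fw` query parameters and that each variant declares the firmware range it covers. The URL, parameter names, variant IDs, and ranges are all hypothetical.

```python
from urllib.parse import parse_qs, urlparse


def parse_version(text):
    """Turn '2.4.1' into (2, 4, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical variants of one procedure, split by firmware range (major, minor).
VARIANTS = [
    {"id": "pump-align-a", "model": "P100", "fw_min": (1, 0), "fw_max": (1, 9)},
    {"id": "pump-align-b", "model": "P100", "fw_min": (2, 0), "fw_max": (2, 9)},
]


def resolve_variant(qr_url, variants=VARIANTS):
    """Return the variant id for the scanned device, or None if nothing matches."""
    query = parse_qs(urlparse(qr_url).query)
    model = query["model"][0]
    firmware = parse_version(query["fw"][0])[:2]  # compare on major.minor only
    for variant in variants:
        if variant["model"] == model and variant["fw_min"] <= firmware <= variant["fw_max"]:
            return variant["id"]
    return None  # better to show no procedure than the wrong one


print(resolve_variant("https://kms.example/sw?model=P100&fw=2.4.1"))
```

Returning `None` for an unknown model or firmware is a design choice in this sketch: for safety-relevant work, a clear "no match, escalate" is preferable to a best guess.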

Three KBS examples where rules matter

A KBS shines when choices must be justified.

Eligibility & Prior Authorization. Revenue cycle teams encode payer rules into a decision tree with supporting documentation lists. Staff answer a few questions; the system returns “eligible / not eligible” with the exact documents needed. The KBS records its path for audit; the KMS hosts the human-readable guide that explains policy intent. First-pass approvals rise and denials citing “missing documentation” fall.
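A toy version of such a decision tree, including the recorded path, might look like the sketch below. The two questions, the document names, and the rule order are invented for illustration and do not reflect any real payer policy.

```python
def check_eligibility(answers):
    """Walk a tiny illustrative rule set and keep the path taken for audit."""
    path = []  # ordered (question, answer) pairs

    def ask(question):
        value = answers[question]
        path.append((question, value))
        return value

    # Rule 1 (assumed): no active coverage ends the check immediately.
    if not ask("coverage_active"):
        return {"decision": "not eligible", "documents": [], "path": path}

    # Rule 2 (assumed): prior authorization adds required documents.
    documents = ["proof of coverage"]
    if ask("needs_prior_auth"):
        documents += ["prior authorization form", "clinical notes"]

    return {"decision": "eligible", "documents": documents, "path": path}


result = check_eligibility({"coverage_active": True, "needs_prior_auth": True})
print(result["decision"], result["documents"])
print(result["path"])
```

Only the questions actually asked land in the path, so the audit record shows exactly which rules produced the decision.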

IT Known-Error Workaround Selector. Problem management encodes symptoms and environments into a case-based KBS. Given an error signature and a configuration item (CI), it suggests the most likely workaround with a confidence score. The KMS stores the detailed workaround guide and links to the change that will eventually make the workaround unnecessary. MTTR drops while the fix is being engineered.
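The case-based lookup can be sketched with a simple set-overlap score. This example swaps in Jaccard similarity as a stand-in for whatever matching a real KBS uses; the feature tags, workaround IDs, and the 0.3 confidence floor are assumptions.

```python
def jaccard(a, b):
    """Overlap between two feature sets, from 0.0 (disjoint) to 1.0 (identical)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical known-error cases; each points at a workaround guide in the KMS.
KNOWN_ERRORS = [
    {"workaround": "KB-restart-sync-agent",
     "features": {"err:timeout", "ci:mail-gateway", "os:linux"}},
    {"workaround": "KB-clear-token-cache",
     "features": {"err:token-expired", "ci:sso", "os:any"}},
]


def suggest_workaround(signature, cases=KNOWN_ERRORS, min_confidence=0.3):
    """Return (workaround id, confidence) for the closest case, or None."""
    scored = [(jaccard(signature, case["features"]), case["workaround"]) for case in cases]
    confidence, workaround = max(scored)
    if confidence < min_confidence:
        return None  # too weak a match to recommend anything
    return workaround, round(confidence, 2)


print(suggest_workaround({"err:token-expired", "ci:sso"}))
```

The returned ID is meant to be a link target: the KBS picks the case, and the KMS article behind that ID carries the actual steps.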

Configuration Advisor (B2B). Sales engineers answer a small set of questions and a KBS recommends a supported configuration, flagging incompatible options. It outputs a short bill of materials and links to KMS install guides and policy Q&A on support boundaries. Returns and post-sale rework decline.

Pattern: let the KBS compute or reason; let the KMS explain and govern. Keep them linked so provenance and audit survive.

Two KWS examples that feed the KMS

Design/Research Workspace → KMS. Product squads work in a KWS (docs, whiteboards, research repos). At the end of a sprint, they extract customer-facing how-tos and agent playbooks into the KMS using templates. The KWS stays messy by design; the KMS stays polished and governed. Internal confusion drops because the official answers live in one place.

Change & Post-Incident Reviews → KMS. Engineers write post-incident reviews (PIRs) in a KWS. A KM steward harvests known errors, standard changes, and rollback checklists into the KMS. The KWS preserves discovery; the KMS operationalizes it. The next incident resolves faster because the answer exists.

Putting it together: a blended scenario

A new OS update breaks SSO for a subset of users. The developers post a root-cause sketch and a temporary mitigation in the KWS. A KMS owner drafts a troubleshooting guide and a short policy note explaining risk. A KBS rule routes complex cases to the mitigation and simpler cases to a reset. The virtual agent consumes a conversational rendering from the KMS with guardrails (“if these warnings appear, escalate”). Over ten days, the mitigation ships and the known error retires. Metrics show deflection up, AHT down, and reopens halved for the impacted segment. The audit log links user-visible guidance to the change record. No heroics, just systems playing their roles.

“Is Microsoft Teams / SharePoint / Wiki X a KMS?” (and similar comparisons)

Collaboration tools and wikis are fantastic KWS surfaces. They are not, by themselves, a KMS because they lack lifecycle governance, audience-aware reuse, answer-centric templates, and outcome analytics. You can—and should—let teams draft and discuss in a KWS, then move the stable, reusable piece into the KMS where ownership, approvals, review dates, and delivery to multiple surfaces keep truth synchronized. Likewise, a CMS is great at publishing; your KMS plugs into it (or replaces its article layer) when you need governed answers and outcome metrics, not just pretty pages.

What “the four examples of KMS” often really means

When people ask for “four examples,” they’re usually hunting for use cases, not product names. Here are four use cases that nearly every organization can implement in month one:

- Agent-assist troubleshooting for the top three contact drivers.

- Customer portal how-tos for the top three self-service topics.

- Policy Q&A for the top two HR or compliance hot spots.

- Standard change or safety-critical procedure for a frequent operational task.

If you deliver those four with visible provenance, lifecycle stamps, and clear measurement, you’ve shown the KMS doing its real job.

Mini case cards you can reuse in sales, training, or leadership updates

“From 9 PDFs to 3 Cards.” A telecom condensed nine conflicting reset guides into three governed troubleshooting cards. Titles used the words agents typed; synonyms mapped legacy device nicknames. Card suggestions triggered on error codes. First-contact resolution (FCR) rose seven points on the pattern and reopens fell by a third.

“Partner Drift to Single Source.” A software company had internal and partner docs that contradicted each other within weeks. They moved to one canonical answer with audience gating. Exceptions dropped 40%, and the partner NPS recovered in a quarter.

“From Denials to Decisions.” A health network encoded three high-pain payer rules as short decision aids plus checklists, all linked to source bulletins. First-pass approvals rose 12% and time spent on denial appeals shrank by days.

“QR + Offline in the Field.” An equipment maker replaced a 42-page PDF with a visual standard-work guide, tagged by model/firmware and cached offline with a QR launch. Repeat visits fell 31% and a near-miss hazard disappeared after a warning moved to screen one.

Drop these into your proof pack; they’re pattern-complete and map directly to outcomes.

How to build your own examples library (and keep it useful)

A good examples library is small, visual, and alive. Start by picking one scenario per function: support, HR, IT, field, partner. For each, keep four artifacts: a “before” snapshot (the messy reality), the governed answer as it lives in the KMS (owner and dates visible), a “where it shows up” screenshot for each surface (agent, portal, chat, app), and an “after” chart with a date-stamped movement (article-assisted resolutions, deflection, MTTR, first-time fix). Keep a single page per example. When you update the answer, the example updates itself because you link to the canonical article, not a static export. Over a quarter you’ll have a gallery leadership can flip through to understand value without reading a manual.

Common pitfalls (and the easy way out)

A classic failure is mistaking the KWS for the KMS and leaving the “answer” buried in a discussion thread. Fix it with a frictionless handoff: “suggest article” buttons in chat and ticket tools that open the KMS template with context prefilled. Another is forking: internal, partner, and public copies that drift. Fix it by keeping one canonical answer with audience-aware sections and permissioning. A third is encyclopedias masquerading as answers; they rank well but rarely help. Split them into task-sized units and crosslink. The last is reporting page views instead of outcomes. Decide up front which metric each example intends to move and wire that signal before you publish.

Where AI fits (and why provenance still wins)

AI makes examples easier to produce and better to consume. Use it to draft from long sources into your templates, to propose synonyms that match the language users actually type, and to render conversational snippets for chat—always linked back to the canonical source so users can trust what they’re seeing. Use semantic search to catch messy phrasing and rerank by observed solves. Keep a human in the approval seat, especially where safety, policy, or money is involved. The point of AI here isn’t to invent knowledge; it’s to surface and shape what you already know faster and more consistently.
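Reranking by observed solves can be as simple as blending the search engine's similarity score with a smoothed solve rate. The sketch below is one possible formula, not a standard one; the weight, the smoothing prior, and the sample numbers are assumptions chosen for illustration.

```python
def rerank(results, weight=0.3, prior_views=20, prior_rate=0.5):
    """Sort results by a blend of semantic similarity and smoothed solve rate.

    The prior keeps a new article with few views from being ranked on noise:
    it behaves as if every article started with prior_views views at prior_rate.
    """
    def blended(result):
        solve_rate = (result["solves"] + prior_rate * prior_views) / (result["views"] + prior_views)
        return (1 - weight) * result["similarity"] + weight * solve_rate

    return sorted(results, key=blended, reverse=True)


# Made-up numbers: A matches the query slightly better, but B resolves far more cases.
results = [
    {"id": "A", "similarity": 0.80, "views": 200, "solves": 20},
    {"id": "B", "similarity": 0.76, "views": 200, "solves": 160},
]

print([r["id"] for r in rerank(results)])
```

Setting `weight=0` falls back to pure semantic order, which makes it easy to compare the two rankings side by side before trusting the blend.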

A final checklist to sanity-check any example

When you assemble an example, ask five questions. Is the answer object short, structured, and scoped to one job? Is provenance visible—owner, last/next review, change log—and, if needed, a link to the governing source? Does it render in more than one surface without copy-paste (agent card, portal page, chat snippet, in-app hint)? Is the language aligned with the way users actually ask (titles, tags, synonyms)? And can you show a movement that matters—assisted resolutions, deflection, MTTR, first-time fix, exception rate? If you can tick those boxes, you’ve got a real example, not a screenshot.
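If you keep your examples as structured records, the five questions can run as an automated pre-publish check. The sketch below is one way to do that; the `ExampleCard` fields, the ten-step cutoff, and the two-surface minimum are assumptions you would tune to your own templates.

```python
from dataclasses import dataclass, field


@dataclass
class ExampleCard:
    """Hypothetical record of one example; field names are illustrative."""
    steps: list
    owner: str = ""
    next_review: str = ""                          # ISO date string
    surfaces: set = field(default_factory=set)     # e.g. {"agent", "portal"}
    synonyms: list = field(default_factory=list)
    outcome_metric: str = ""                       # e.g. "first-time fix"


def sanity_check(card, max_steps=10):
    """Map each of the five questions to True or False."""
    return {
        "short_and_scoped": 0 < len(card.steps) <= max_steps,
        "provenance_visible": bool(card.owner and card.next_review),
        "multi_surface": len(card.surfaces) >= 2,
        "user_language": bool(card.synonyms),
        "outcome_tracked": bool(card.outcome_metric),
    }


draft = ExampleCard(steps=["Open settings", "Re-pair device"], owner="support-km")
failed = [name for name, ok in sanity_check(draft).items() if not ok]
print(failed)
```

A check like this cannot judge whether the answer is good, only whether the basics are in place; the human reviewer still owns the call.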
